Is open source r-selected?

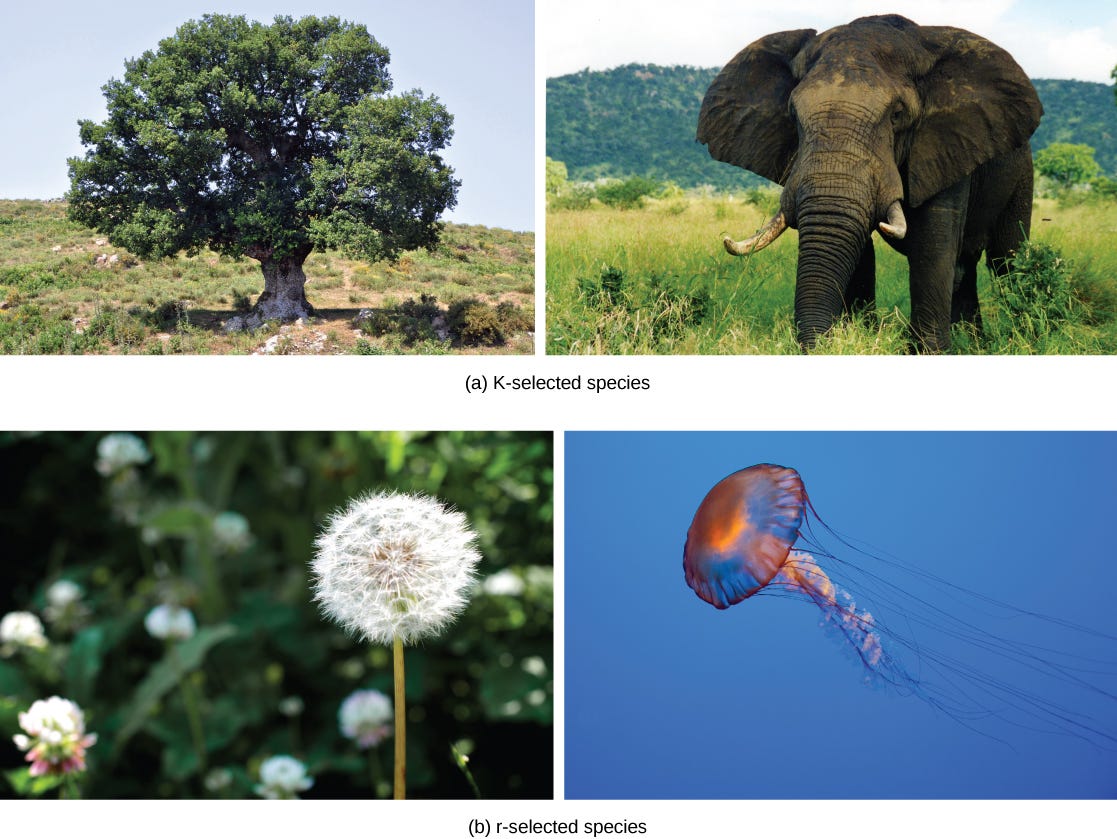

So there’s this idea from evolutionary ecology called r/K selection theory. The gist is that many species seem to converge toward one of two extreme survival strategies: r-selection, or K-selection.
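For context, the letters come from the logistic growth model that the theory borrows from population ecology. Here $N$ is population size, $r$ is the intrinsic growth rate, and $K$ is the carrying capacity of the habitat:

$$
\frac{dN}{dt} = rN\left(1 - \frac{N}{K}\right)
$$

Roughly speaking, r-strategists are tuned to grow fast while $N$ is far below $K$, and K-strategists are tuned to compete well when $N$ sits close to $K$.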

| r-selection | K-selection |
|---|---|
| many offspring, low investment | few offspring, high investment |
| expand into open niches | compete for crowded niches |
| unlimited inputs (e.g. rabbits rarely run out of grass) | scarce inputs (e.g. predators frequently face starvation) |
| unstable habitats | stable habitats |

Basically swarms vs tanks, in video game terms.

This is a simplification. Not every species maps well to this heuristic, but r and K do seem to be interesting, defensible points along the colonization-competition tradeoff.

So might there be such a thing as r/K selection in software? r/K is a survival and reproduction strategy, so a natural next question is, does software reproduce? Does it evolve? I think so!

One way software reproduces is through literally getting copied. npm install. Software modules can be composed and modified to produce new species. Technology evolves compositionally.

Another way software might reproduce is through memes. Concepts like hashmaps are reproduced across many programming languages. You can’t npm install Uber, but the concept of Uber can be reproduced and even mutated. If I say “Like Uber, but for kittens”, you kind of know what I mean. It’s part of the memepool.

Also, we know evolution happens in any system with heredity, mutation, and selection.

Heredity: software is copied, either verbatim, or through memes.

Mutation: software is composed with other software, or modified.

Selection: some software gets used, some goes extinct.
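To make the three ingredients concrete, here is a toy sketch in JavaScript (the language of the npm examples in this post). It is not a model of any real package ecosystem: each hypothetical "genome" is just an array of bits, fitness simply counts the 1s, and every function name and parameter (`evolve`, `reproduce`, `mutationRate`, and so on) is made up for illustration. A small seeded PRNG keeps runs reproducible.

```javascript
// Toy illustration only: "genomes" are bit arrays and fitness counts the 1s.
// All names and parameters here are hypothetical.

// mulberry32: a tiny seeded PRNG so runs are reproducible.
function mulberry32(seed) {
  let a = seed | 0;
  return function () {
    a = (a + 0x6d2b79f5) | 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

const fitness = (genome) => genome.reduce((sum, bit) => sum + bit, 0);

// Heredity + mutation: the child copies its parent, but each bit may flip.
function reproduce(parent, rand, mutationRate) {
  return parent.map((bit) => (rand() < mutationRate ? 1 - bit : bit));
}

// Runs the loop and returns the best fitness seen in each generation.
function evolve({
  genomeLength = 20,
  popSize = 30,
  generations = 40,
  mutationRate = 0.02,
  seed = 42,
} = {}) {
  const rand = mulberry32(seed);
  let population = Array.from({ length: popSize }, () =>
    Array.from({ length: genomeLength }, () => (rand() < 0.5 ? 1 : 0))
  );
  const bestPerGeneration = [];
  for (let g = 0; g < generations; g++) {
    // Selection: rank by fitness; only the top half survives to reproduce.
    population.sort((a, b) => fitness(b) - fitness(a));
    bestPerGeneration.push(fitness(population[0]));
    const survivors = population.slice(0, Math.floor(popSize / 2));
    const children = survivors.map((p) => reproduce(p, rand, mutationRate));
    population = survivors.concat(children);
  }
  return bestPerGeneration;
}

console.log(evolve().join(" "));
```

Because the survivors are kept unchanged alongside their mutated copies, the best fitness can never drop from one generation to the next. Replace the ranked selection with random survival and nothing pushes fitness upward any more.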

So we can say software evolves. Perhaps it is even subject to r/K selection? I’d like to run with this analogy. What kind of software wants to be K-selected? What kind of software wants to be r-selected?

Aggregators seem K-selected. They are big and few in number. Millions of dollars and human hours are poured into their care and raising. They are so K-selected that their survival strategy is functional immortality, rather than reproduction. Aggregators compete effectively within established niches, but may struggle to adapt to new environments (the innovator’s dilemma). K-selected species need stable environments, and aggregators limit variety to stabilize their environment and prevent disruption. Aggregators are like apex predators, and like many apex predators they are also a keystone species. Whole ecologies of influencers and creators depend upon them.

Programming languages and protocols also seem K-selected to me. They operate at the infrastructure pace layer, and last decades or more. Millions of hours of effort are poured into gardening and evolving them through RFCs, standards, and PEPs. Protocols are like mangrove trees. Their growth constructs an ecology that supports many other species.

What about r-selected software?

Unix and C are the ultimate computer viruses.

(Richard Gabriel, 1989. “The Rise of Worse is Better”)

Is open source more like an elephant or a dandelion seed?

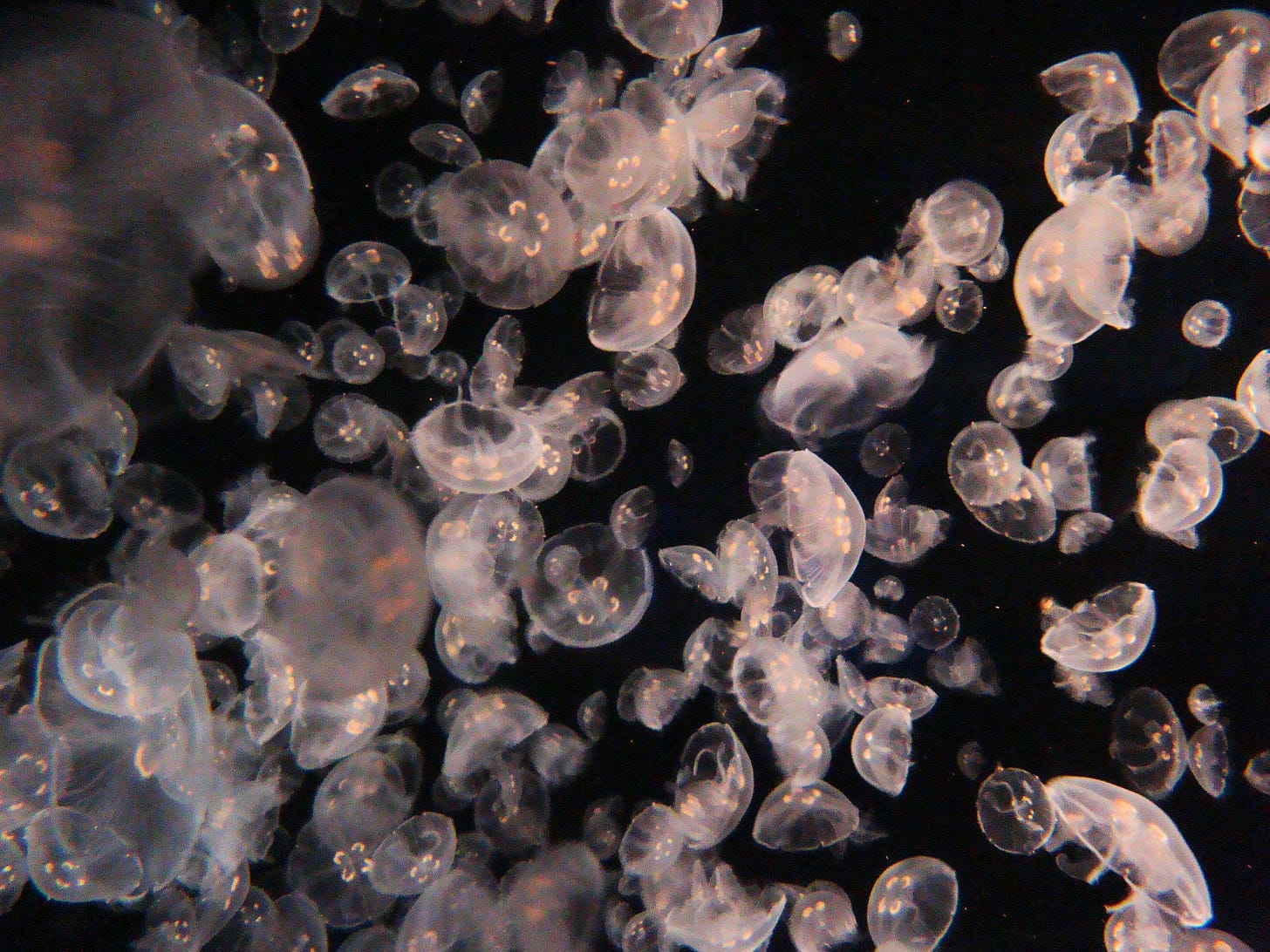

On the one hand, Linux feels like an elephant. On the other hand, the proliferation of one-function modules and micropackages on npm sure feels like dandelion seeds. Take, for example, is-even, a tiny module on npm that gets hundreds of thousands of downloads per week.

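```javascript
// Pull in the is-even package (installed with: npm install is-even)
const isEven = require('is-even');

isEven(0); // returns true
```

This could have been done in one short line of code, but it is easier just to npm install. Reproductive success?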
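For comparison, the "one short line" might look like the sketch below. It is a hypothetical inline replacement, not the package's own source, and it skips whatever input validation the package may perform.

```javascript
// Hypothetical inline replacement for the is-even package (no input validation).
const isEven = (n) => n % 2 === 0;

console.log(isEven(0)); // true
console.log(isEven(7)); // false
console.log(isEven(-4)); // true
```

JavaScript's `%` keeps the sign of the dividend, so `-4 % 2` is `-0`, which still compares equal to `0`.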

The amount of dependency churn within the npm ecosystem seems r-selected, too.

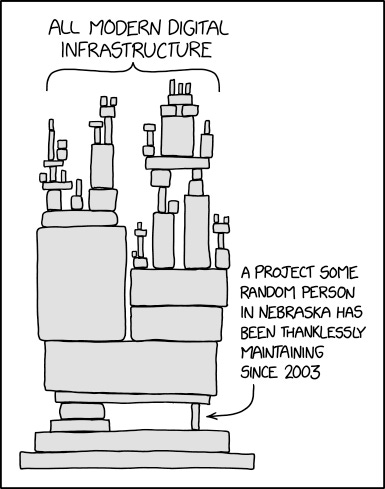

There is one major wrinkle in this narrative. r-selected species thrive where food is abundant. Rabbits rarely run out of grass, but open source is under constant threat of starvation.

Even very popular libraries subsist on starvation wages, or nothing at all. Maintainers struggle to keep up with requests. Burnout is common. Sometimes libraries get abandoned as a result. Not a great equilibrium.

While all this plays out, there is an asteroid hurtling toward us that seems likely to upend the whole ecosystem, for better and worse.

This is GitHub Copilot. It is AI code autocompletion.

It’s pretty easy to see where this is going. Consider the output from newer, larger generative models, like DALL-E.

The coherence of these images is remarkable. More than good enough to provoke meaning-making. It feels like we’re about 90% of the way toward generating finished artwork, icons, and essays that are indistinguishable from the hand-authored thing. So how long before we can generate entire software libraries? Applications? One year? Five? Ten?

What does this do to open source, to software in general? If maintainer attention is the bottleneck for r-selected open source, what happens when LLMs start writing software?

Instead of K-selected libraries, might LLMs generate hundreds or millions of r-selected solutions?

Is maintenance necessary when you can generate new libraries from scratch? Will open source become so cheap you can throw it away?

Will LLMs generate small modules more reliably than big ones? If small libraries are more tractable, can LLMs also recursively compose those small modules into bigger ensembles, and so on?

How understandable will AI-generated code be? Is human-meaningful code the most effective approach? Will LLMs prefer their own intermediate languages? Or will we have LLMs generate high performance bytecode directly?

Will large swaths of libraries end up having similar exploits due to artifacts of the LLM? What kinds of heuristic code analysis might we need to build on top? Or could LLMs generate security analysis too, like a kind of generative adversarial network?

Will the rate of new niche discovery increase once we are able to rapidly generate programs and try new things?

Who owns these hypothetical LLMs? Will they become the next point of aggregation? I can picture AI-powered IDEs that have the strategic significance of game engines in the sense that you can’t build without them.

On the other hand, once you get past the high capital costs of training an LLM, it can churn out an effectively infinite supply. And these models seem to be quickly simplified and copied once created. Perhaps multiple LLMs will compete and churn out software modules as a pure commodity. Perhaps an LLM that licenses output as open source might outcompete the others, since an open source module can be copied at zero marginal cost. r-selection.

r-selected species thrive in unstable habitats. K-selected species don’t do well in rapidly changing situations. A software ecosystem full of fast-breeding, new-niche-constructing software seems likely to be unstable. Might r-selected open source become a threat to K-selected aggregators?

Update 2022-07-18: @seefeld builds on these musings with two related preprints: Can OpenAI Codex and Other Large Language Models Help Us Fix Security Bugs? and Synchromesh: Reliable code generation from pre-trained language models.