Deep Future

AI-driven scenario planning

For the past couple of months, I have been working on Deep Future, an AI agent for scenario planning. It’s like Deep Research but for strategic foresight.

Picture this: you’re reading a news story about rare-earth minerals. Curious, you fire up the Deep Future agent and run a rapid analysis. In the time it takes to finish your morning cup of coffee, it has produced a detailed report covering supply chains, chips, defense tech, possible scenarios, a list of the forces driving change, early warning signals, and strategic leverage points. Deep Future has completed in 15 minutes a strategic analysis that would normally take days.

My research in this area has been supported by The Future of Life Foundation as part of the AI for Human Reasoning program. FLF believes AI can augment intelligence. I agree. If you follow this newsletter, you’ll know that I’ve long been interested in using AI to accelerate the OODA loop. Scenario planning is a promising place to start.

Thinking in scenarios

Everyone has a plan until they get punched in the face.

- Mike Tyson

Scenario planning emerged during the Cold War, when the US military and RAND started to adapt ideas from game theory and systems theory to make sense of the new strategic landscape. At its core, scenario planning tackles a fundamental challenge: how do you make plans in an unpredictable environment?

When your environment is predictable, strategy is simple. You identify a goal, plan steps toward your goal, and carry them out. But our environment is seldom predictable. We live in a networked world, a world governed by asymmetry, feedback loops, and power laws. The US Army and Navy War Colleges have an acronym for this kind of world: VUCA.

Volatile

Uncertain

Complex

Ambiguous

Plans that work in stable environments fail in VUCA environments. They are too brittle, because they depend upon prediction in an unpredictable world. VUCA environments demand a different approach. But if you can’t predict, how do you plan?

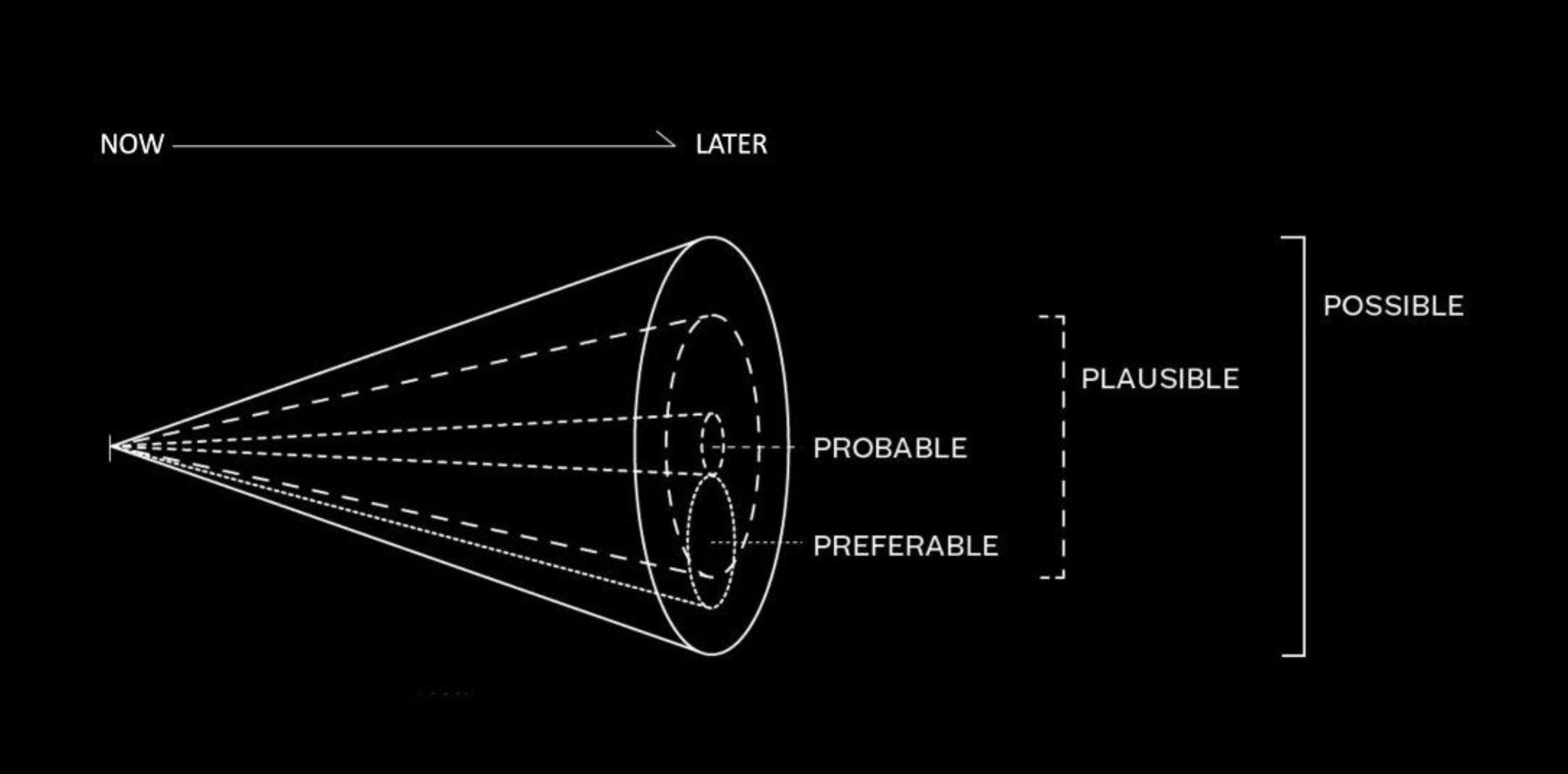

Picture your future as a cone. This cone represents the space of all possible outcomes: good, bad, everything in-between. The further into the future we go, the wider the cone of possibilities becomes.

Traditional planning traces a single path through this cone, the preferable path, a direct path toward your goal. But this is like drawing a line between two points and claiming you’ve drawn a map. Sure, we can follow that line and hope for the best, but the line doesn’t give us any sense of scale or orientation. It doesn’t tell us anything about the territory. While following that line, we might run into mountains, get mired in swamps, or walk off cliffs. Worse still, the further we get into the future, the less predictive the line will be! Any errors will compound, until we find ourselves far off our intended course.

Drawing one line isn’t enough. The preferable path articulates what we hope will happen, but hope is not a strategy. We need to map more territory! This is where scenario planning comes in.

Scenario planning traces multiple paths through the cone of possibility. Each of these paths cuts through a different part of the cone. Between them, we end up covering a broad radius of potential outcomes. Tracing these paths is not about predicting. It is about exploring the space of the probable, plausible, and possible.

How does this work?

The future is already here, it’s just not evenly distributed.

- William Gibson

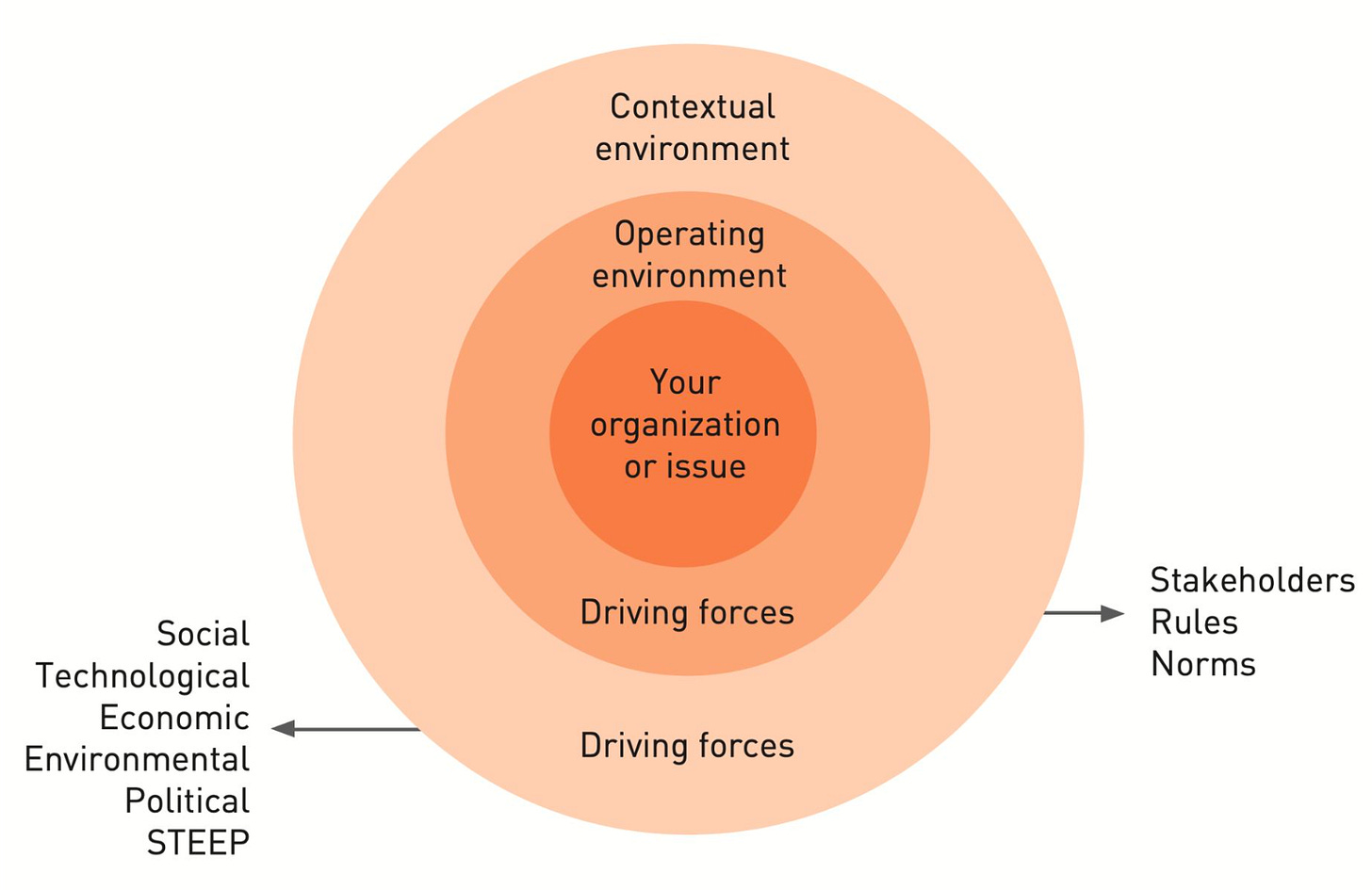

We can’t predict the future, but we can identify the forces that are driving change in our environment today. This process of mapping drivers is not speculative. Drivers are trends, issues, projections, and obstacles that we can point to and that are happening now: demographic changes, technological adoption curves, economic bubbles, environmental tipping points, political transitions, and so on. These forces have mass and momentum. If we see them driving change today, we know they will drive change tomorrow.

Systems fool us by presenting themselves as a series of events.

- Donella Meadows

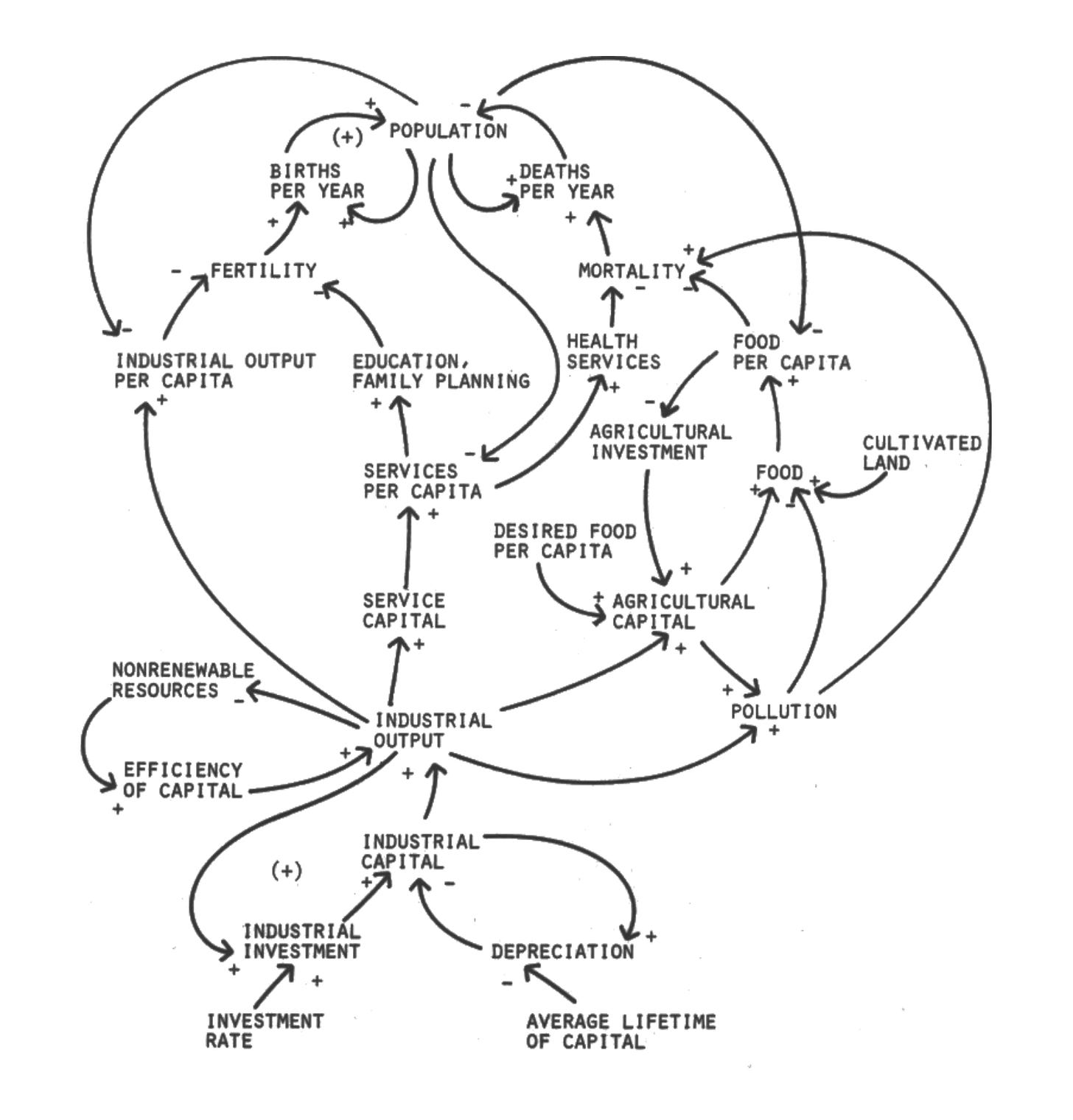

Once we have mapped the relevant forces, we can begin exploring how they are connected into feedback loops that generate exponential growth, collapse, stasis, or transformation. We can also look at how forces sort into pace layers, revealing what is changing quickly, and what is changing slowly. Where fast and slow collide, we find pivot points.

Analyzing forces as systems can help us break out of the trap of linear thinking:

The human mind is not adapted to interpreting how social systems behave. Social systems belong to the class called multi-loop nonlinear feedback systems. In the long history of evolution it has not been necessary until very recent historical times for people to understand complex feedback systems. Evolutionary processes have not given us the mental ability to interpret properly the dynamic behavior of those complex systems in which we are now embedded.

(Jay Forrester, 1971. “Counterintuitive behavior of social systems”)

The complex processes we call “systems” present special challenges to our uneducated imaginations. We tend to think additively, and are constantly surprised when something that seems to be “just added in” causes surprising and often disastrous changes. For example, in 1859 in Australia, Thomas Austin said “The introduction of a few rabbits could do little harm and might provide a touch of home, in addition to a spot of hunting.” The result was not the Australian ecology + rabbits, but an entirely new ecology, which in many cases became a landscape of ruins. None of the efforts since then to “subtract” the rabbits from the ecology have come close to working.

(Alan Kay, 2005. “Enlightened Imagination For Citizens”)

Twice the cause does not mean twice the effect. Actual results will depend upon system structure. Mapping the relationships between forces reveals places where nonlinear change is likely to occur.
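To make this concrete, here is a minimal sketch of a single reinforcing feedback loop, where a quantity grows each step in proportion to its own size. The function name, growth rates, and step count are my own illustrative assumptions, not figures from any real system.

```python
def reinforcing_loop(rate: float, steps: int, start: float = 1.0) -> float:
    """Run a stock through a reinforcing loop: growth feeds on itself."""
    level = start
    for _ in range(steps):
        level += level * rate  # each step's output becomes the next step's input
    return level


# Hypothetical growth rates, chosen only to illustrate the effect.
low = reinforcing_loop(rate=0.10, steps=20)
high = reinforcing_loop(rate=0.20, steps=20)

# Doubling the growth rate (the "cause") far more than doubles the outcome.
print(f"10% per step: {low:.1f}x")
print(f"20% per step: {high:.1f}x")
print(f"ratio of outcomes: {high / low:.1f}")
```

In an additive system, doubling the rate would double the result. Here the loop structure does the amplifying, which is why the relationships between forces matter as much as the forces themselves.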

Some confluences of forces will be so powerful that they form the outline of an obvious scenario—a large-scale outcome driven by the interaction of multiple forces. After identifying a few of these scenarios, we can triangulate between multiple possible outcomes, and form strategies that are robust across many futures. We can even create multiple plans, contingency plans, that we can deploy if we see ourselves moving toward one scenario or another.

Instead of planning from the inside-out, projecting our hopes onto the environment, we are now planning from the outside-in. We’re mapping the possibility space, then charting paths through it!

It’s a process that produces surprising insights. My favorite thing about scenario planning is how it changes my understanding of the present.

Accelerating scenarios with AI

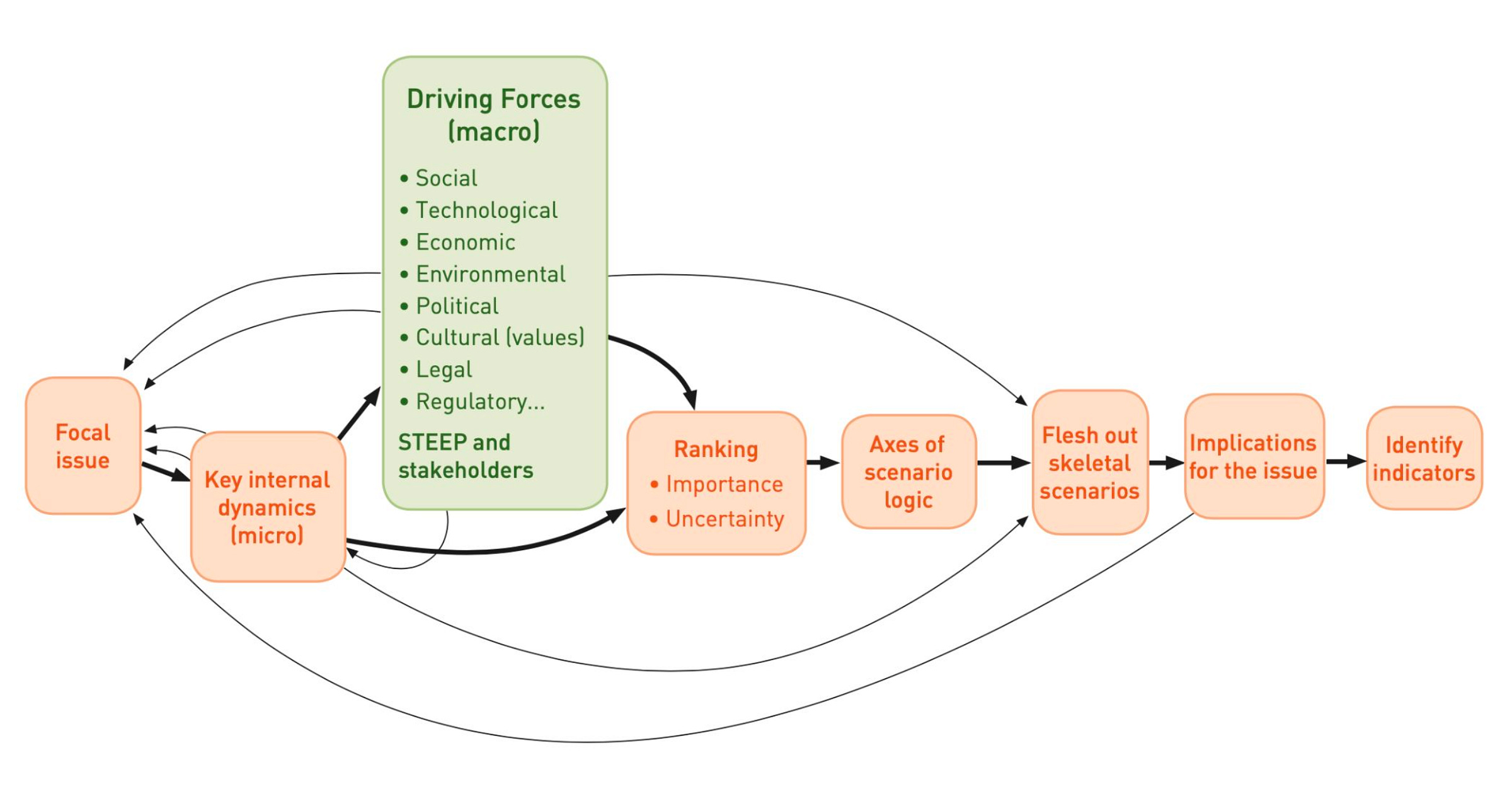

So where does AI come in? Scenario planning goes through several structured research stages:

Framing: Establish a focal question with clear timeframe and scope to guide our research (e.g. “what will cancer research look like in 10 years?”).

Environmental scanning: Catalog the forces driving change across multiple areas (Social, Technological, Economic, Environmental, Political…). Map major actors in the environment. Build up a database of research.

Structural analysis: Analyze how actors and forces interact. Rank forces by impact, uncertainty, pace layer, and so on.

Scenario identification: Identify a cluster of pivotal forces, typically high-impact forces with uncertainties related to our focal question. Explore how these forces might collide, e.g. by crossing two of them in a 2x2 matrix, or by doing a cross-impact analysis. This yields four or more windows into possible futures.

Scenario development: With four or more representative scenarios identified, explore scenarios in depth. Develop descriptions that help us understand what these futures might look like, how actors might behave within them, and what this implies for our focal question. Techniques like wargaming can also be used to dynamically explore scenarios.

Signpost monitoring: Develop a list of indicators that act as early warning signals for one scenario or another. Track them regularly.

Strategic development: Identify strategies to meet threats and achieve goals across a wide range of scenarios. Develop contingency plans. Identify new uncertainties and questions for future research.
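The ranking and 2x2 steps in the stages above can be sketched in a few lines. This is a toy illustration, not how Deep Future works internally: the `Force` class, the 1-5 scoring scale, the impact-times-uncertainty ranking rule, and every force and score below are assumptions invented for the example.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Force:
    name: str
    impact: int             # hypothetical 1-5 score
    uncertainty: int        # hypothetical 1-5 score
    poles: tuple[str, str]  # the two extreme ways the force could resolve


def pivotal_forces(forces: list[Force], count: int = 2) -> list[Force]:
    """Rank forces by impact x uncertainty and keep the top `count`."""
    ranked = sorted(forces, key=lambda f: f.impact * f.uncertainty, reverse=True)
    return ranked[:count]


def scenario_matrix(axis_a: Force, axis_b: Force) -> list[tuple[str, str]]:
    """Cross the poles of two forces to get the four cells of a 2x2 matrix."""
    return list(product(axis_a.poles, axis_b.poles))


# Placeholder forces and scores, invented for illustration only.
forces = [
    Force("Export controls", 5, 4, ("tighten", "loosen")),
    Force("Recycling technology", 3, 4, ("breakthrough", "stagnation")),
    Force("EV demand", 4, 2, ("surge", "plateau")),
    Force("Population aging", 2, 1, ("faster", "slower")),
]

top = pivotal_forces(forces)
scenarios = scenario_matrix(top[0], top[1])
for a, b in scenarios:
    print(f"{top[0].name}: {a} / {top[1].name}: {b}")
```

Each printed line is one quadrant of the matrix, a seed for a scenario to develop further. Forces that score high on impact but low on uncertainty drop out of the axes, since they show up in every scenario rather than distinguishing between them.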

Digging into these stages reveals multiple opportunities for AI to automate or augment the research process. Agents can help search for signals during environmental scanning, work with you to identify forces, perform automated analysis, explore thousands of scenarios, monitor signposts, and more. The data we generate during scanning acts as a ground-truthing mechanism, allowing us to trace our assumptions all the way back to the scanning hits—facts, events, trends—that started our analysis.

Scenario planning has well-developed research methods and processes (TAIDA, Houston Framework Foresight, etc). At the same time, these processes are not simple, fixed, or linear. We might need to change the methods we use within a stage depending on the question, the number of participants, and the context. We might loop back to earlier stages as we learn from our research. It’s the same kind of fluid domain faced by Deep Research:

Research work involves open-ended problems where it’s very difficult to predict the required steps in advance. You can’t hardcode a fixed path for exploring complex topics, as the process is inherently dynamic and path-dependent. When people conduct research, they tend to continuously update their approach based on discoveries, following leads that emerge during investigation.

This unpredictability makes AI agents particularly well-suited for research tasks. Research demands the flexibility to pivot or explore tangential connections as the investigation unfolds. The model must operate autonomously for many turns, making decisions about which directions to pursue based on intermediate findings. A linear, one-shot pipeline cannot handle these tasks.

(Anthropic, 2025. “How we built our multi-agent research system”.)

This kind of dynamic process requires a facilitator with strong intuitions for when to move forward, when to loop back, and when to dive deeper. As Deep Research has shown, agents are surprisingly good at this!

I’m still early in the development process, but the prototypes already feel promising. Deep Future agents are equipped with a MemGPT-like memory system, a handful of tools, and a library of prompts adapted from scenario methods. They can successfully guide you through defining a focal question, identifying forces, analyzing structural connections, and making strategic recommendations. I’m pretty happy with the early results—not as good as an experienced facilitator, but then speed has a quality all its own.

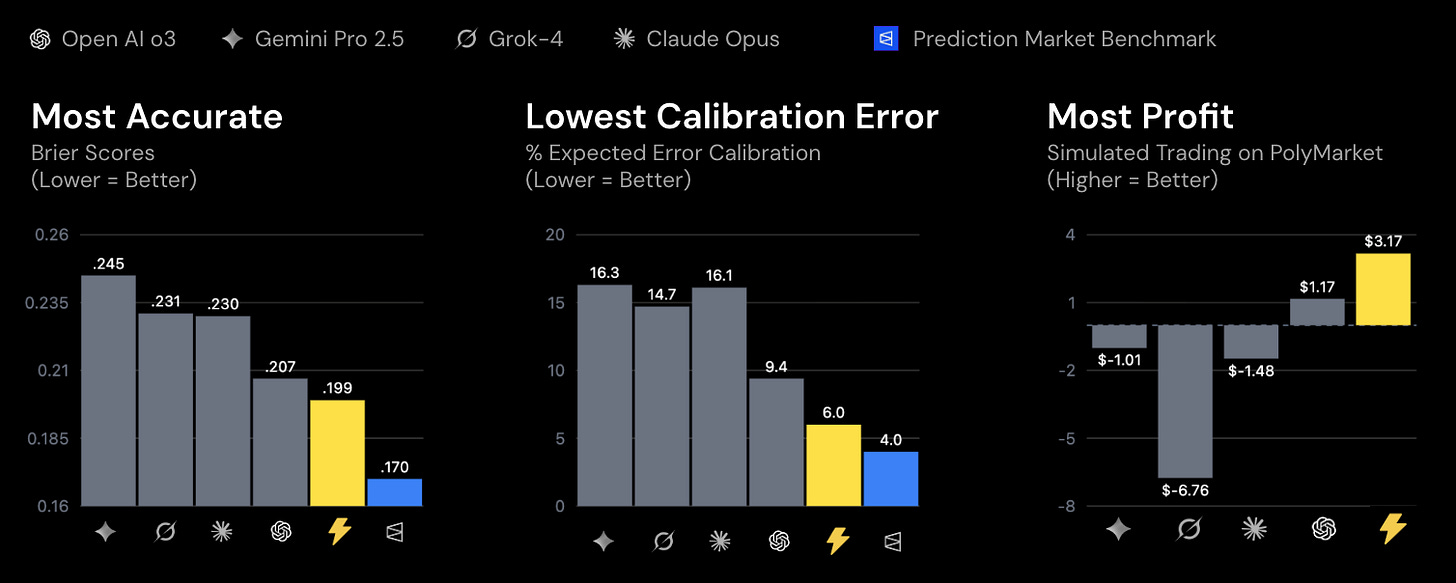

There is also a lot of headroom for improvement. LLMs are getting better at forecasting, a skillset that has critical overlap with strategic foresight. We may soon have LLMs that can predict as accurately as a superforecaster.

And there are areas where AI can already outperform us. Agents are patient. They never sleep. They can do continual environmental scanning, ingesting news feeds, identifying driving forces, updating scenario models, and sending notifications the moment an early warning signal is detected. This kind of signals intelligence used to require a dedicated team of researchers. Now AI brings it within reach of individuals.
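As a rough sketch of what signpost monitoring could look like, the example below checks incoming headlines against a list of signposts and reports which scenario each hit points toward. Simple keyword matching is a deliberately crude stand-in for whatever an agent would actually do, and the `Signpost` class, scenario names, keywords, and headlines are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Signpost:
    scenario: str             # the scenario this signal points toward
    keywords: frozenset[str]  # every keyword must appear for a match


def matches(signpost: Signpost, headline: str) -> bool:
    """Return True if the headline contains all of the signpost's keywords."""
    words = set(re.findall(r"[a-z]+", headline.lower()))
    return signpost.keywords <= words


def scan(signposts: list[Signpost], headlines: list[str]) -> list[tuple[str, str]]:
    """Return (scenario, headline) pairs for every signpost that fires."""
    return [
        (sp.scenario, headline)
        for headline in headlines
        for sp in signposts
        if matches(sp, headline)
    ]


# Invented signposts and headlines, for illustration only.
signposts = [
    Signpost("Supply squeeze", frozenset({"export", "quota"})),
    Signpost("Circular supply", frozenset({"recycling", "plant"})),
]
headlines = [
    "Ministry announces new export quota on rare earths",
    "Startup opens magnet recycling plant in Texas",
    "Local team wins championship",
]

alerts = scan(signposts, headlines)
for scenario, headline in alerts:
    print(f"[{scenario}] {headline}")
```

Run on a schedule against a real feed, a loop like this would turn the signpost list from a document into a standing watch.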

Ultimately, by dropping the cost of scenario analysis from days to minutes, we can begin to do high-frequency scenario planning, applying the power of scenario-thinking to more questions, more often.

I’m developing Deep Future alongside a limited number of exclusive founding partners. Interested? Reply to this email, or join the waitlist.